Introduction

“We stay hungry” is one of our core values at celebrate company. Hence, it is no wonder that we are always interested in getting to the bottom of the methods we use. To this end, we regularly discuss scientific articles in our AI group. This post summarizes our discussion of the ACL 2020 paper Named Entity Recognition without Labelled Data: A Weak Supervision Approach. You can find the full text here.

In a nutshell

The paper proposes a solution for the situation where you want to detect entity names in your documents but do not have (sufficient) labeled data to train a statistical Named Entity Recognition (NER) model. Existing models trained on other domains (e.g., news texts) perform poorly if the texts from your target domain differ substantially. Existing domain adaptation solutions that rely on large pretrained language models, such as AdaptaBERT, can be very expensive to use, both for training and for inference. In contrast, this paper suggests a lightweight solution that can be used with any machine learning model.

Proposed workflow

Create labeling functions for automatic annotation

Train a hidden Markov model (HMM) to unify the different, sometimes contradictory, annotations

Train the sequence labeling model of your choice on the unified HMM annotation
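To make the three steps concrete, here is a minimal pure-Python sketch of the workflow. It does not use skweak, and several simplifications are assumptions of ours: the labeling functions, the gazetteer, and all function names are hypothetical; a per-token majority vote stands in for the HMM (so it ignores label transitions and labeling-function accuracies); and the "sequence labeling model" is a deliberately trivial lookup table rather than a real tagger such as a CRF or neural network.

```python
from collections import Counter, defaultdict

# Step 1: labeling functions (hypothetical toy examples). Each maps a list
# of tokens to one label per token; "O" means "not part of a name".
KNOWN_NAMES = {"anna", "jonas"}  # hypothetical gazetteer


def lf_gazetteer(tokens):
    return ["NAME" if t.lower() in KNOWN_NAMES else "O" for t in tokens]


def lf_capitalized(tokens):
    # Capitalized tokens that are not sentence-initial are guessed to be names.
    return ["NAME" if i > 0 and t[:1].isupper() else "O"
            for i, t in enumerate(tokens)]


def lf_after_salutation(tokens):
    # The token right after "Dear" is guessed to be a name.
    return ["NAME" if i > 0 and tokens[i - 1] == "Dear" else "O"
            for i in range(len(tokens))]


LABELING_FUNCTIONS = [lf_gazetteer, lf_capitalized, lf_after_salutation]


# Step 2: aggregation. A per-token majority vote stands in for the HMM here.
def aggregate(tokens):
    votes = [lf(tokens) for lf in LABELING_FUNCTIONS]
    return [Counter(column).most_common(1)[0][0] for column in zip(*votes)]


# Step 3: train a tagger on the aggregated labels. This trivial model just
# memorizes the most frequent aggregated label per (lowercased) word.
def train_tagger(corpus):
    counts = defaultdict(Counter)
    for tokens in corpus:
        for token, label in zip(tokens, aggregate(tokens)):
            counts[token.lower()][label] += 1
    table = {w: c.most_common(1)[0][0] for w, c in counts.items()}
    # Unseen words default to "O"; no labeling function is called here.
    return lambda tokens: [table.get(t.lower(), "O") for t in tokens]


corpus = [
    "Dear Anna , thank you for coming".split(),
    "We thank Jonas and Anna".split(),
]
tagger = train_tagger(corpus)
print(tagger("Anna and Jonas say thank you".split()))
# → ['NAME', 'O', 'NAME', 'O', 'O', 'O']
```

The trained tagger labels "Anna" and "Jonas" without calling any labeling function, which is the point of the third step: the final model no longer depends on the labeling functions at inference time.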

Illustration of the weak supervision approach (taken from the paper).

Open-source library

The techniques in this paper are implemented in the open-source library skweak. You can find it on GitHub or PyPI.

In 2021, the library also made it to ACL as a system demonstration.

Why are we interested in it?

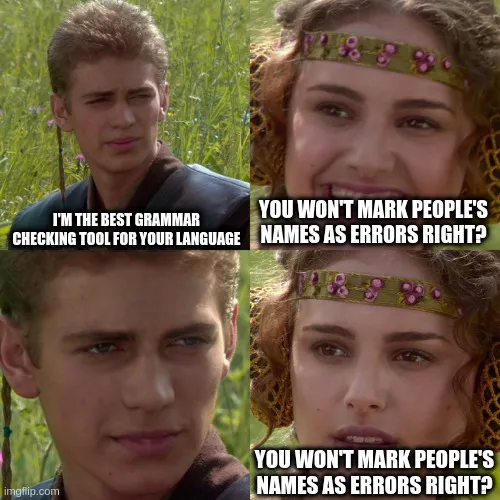

At celebrate, we offer a high-quality (grammatical and stylistic) text check to buyers of our print products. To support our order-checking agents in this task, we rely on NLP software to mark likely errors in a customer’s text. Names are notorious sources of false positives in this process because they are open-class items and tend to look different from common nouns. Detecting names in customer texts and treating them differently therefore helps our agents focus on more likely error candidates.

Furthermore, it goes without saying that texts written on a wedding invitation or in a guestbook are very different from texts in other domains, such as news, for which pretrained NER models are readily available.

The details

Labeling functions

Anything you can code can be a labeling function. You can call one or more out-of-domain NER models, look up words in a list of known names you are interested in (e.g., extracted from Wikipedia or handcrafted by your domain experts), or invent your own heuristic algorithm. Here, too, you are free to rely on any other NLP tools available for your language, such as POS taggers or syntactic parsers. Importantly, labeling functions do not need to be perfect. It is the job of the aggregation model to weigh different (possibly contradictory) annotations against each other, estimate the accuracy of the individual labeling functions, and unify everything into a single most likely annotation.
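For illustration, here is a minimal pure-Python sketch of two labeling functions that return spans as (start, end, label) triples, plus a helper that converts spans to per-token BIO tags. It does not use skweak's API; the gazetteer contents, the function names, and the "PER" label are hypothetical. Applied to the same sentence, the two functions disagree, which is exactly the kind of conflict the aggregation model is meant to resolve.

```python
from typing import Iterator, List, Tuple

Span = Tuple[int, int, str]  # (start token, end token exclusive, label)

# Hypothetical gazetteer of (possibly multi-token) names, lowercased.
GAZETTEER = {("anna", "schmidt"), ("jonas",)}


def lf_gazetteer(tokens: List[str]) -> Iterator[Span]:
    """Yield gazetteer matches, preferring the longest match at each position."""
    lowered = [t.lower() for t in tokens]
    max_len = max(len(entry) for entry in GAZETTEER)
    i = 0
    while i < len(tokens):
        for n in range(min(max_len, len(tokens) - i), 0, -1):
            if tuple(lowered[i:i + n]) in GAZETTEER:
                yield i, i + n, "PER"
                i += n
                break
        else:  # no match starting at position i
            i += 1


def lf_capitalized_run(tokens: List[str]) -> Iterator[Span]:
    """Heuristic: a maximal run of capitalized, non-initial tokens is a name."""
    i = 1  # skip the sentence-initial token, which is capitalized anyway
    while i < len(tokens):
        if tokens[i][:1].isupper():
            start = i
            while i < len(tokens) and tokens[i][:1].isupper():
                i += 1
            yield start, i, "PER"
        else:
            i += 1


def to_bio(spans: List[Span], n_tokens: int) -> List[str]:
    """Convert spans to one BIO tag per token; uncovered tokens get 'O'."""
    tags = ["O"] * n_tokens
    for start, end, label in spans:
        tags[start] = "B-" + label
        for k in range(start + 1, end):
            tags[k] = "I-" + label
    return tags


tokens = "Thank you , Anna Schmidt and jonas , from the Berlin team".split()
for lf in (lf_gazetteer, lf_capitalized_run):
    print(lf.__name__, to_bio(list(lf(tokens)), len(tokens)))
```

Both functions find "Anna Schmidt". The gazetteer also finds the lowercased "jonas", which the capitalization heuristic misses, while the heuristic wrongly tags "Berlin" as a person.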

You can find examples in the skweak documentation here.

Aggregation model

The proposed aggregation model is a hidden Markov model (HMM) with multiple emissions. The latent states of the HMM correspond to the true underlying labels (which we do not observe, since we have no labeled data), and the emissions correspond to the observed outputs of the labeling functions. Since there are multiple labeling functions (the more the better), the HMM needs multiple emissions, one per labeling function.
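One way to write this down, in our own notation and assuming (as is common for such aggregation models) that the labeling functions are conditionally independent given the latent state: for a sequence of $T$ tokens with latent labels $y_1, \dots, y_T$ and outputs $o_t^{(j)}$ of $J$ labeling functions, the HMM factorizes the joint probability as

$$
P\left(y_{1:T}, o^{(1:J)}_{1:T}\right) = P(y_1) \prod_{t=2}^{T} P(y_t \mid y_{t-1}) \prod_{t=1}^{T} \prod_{j=1}^{J} P\left(o^{(j)}_t \mid y_t\right)
$$

The first factors describe the label sequence (start and transition probabilities); the double product contains one emission distribution per labeling function, which is what "multiple emissions" means here. The paper's exact parameterization may differ from this sketch.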

Illustration of the aggregation model (taken from the skweak GitHub repository).

After a large amount of unlabeled data has been annotated with the labeling functions, the HMM is trained on these noisy annotations. The trained HMM is then used to annotate the same data. Note that the HMM only models two things: the emission probabilities of each labeling function given a latent state (what each labeling function will output if we know the true underlying label) and the transition probabilities between states (which NER labels are more or less likely to follow a particular NER label). Notably, no word features are considered; these should all have been exploited by the labeling functions (and should therefore be reflected in their output).
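To illustrate how a trained HMM annotates data using nothing but labeling-function outputs, here is a minimal Viterbi decoder for an HMM with one emission distribution per labeling function. This is our own pure-Python sketch, not skweak's implementation: the two labeling functions, the BIO label set, and all probabilities are hypothetical hand-set values, and the training step (in practice typically a form of expectation maximization on the unlabeled corpus) is omitted.

```python
import math
from typing import Dict, List

STATES = ["O", "B-PER", "I-PER"]

# Hypothetical, hand-set parameters. In practice they would be estimated
# from the labeling functions' outputs on unlabeled data.
START = {"O": 0.8, "B-PER": 0.2, "I-PER": 0.0}
TRANS = {  # TRANS[prev][cur]; I-PER may not follow O
    "O": {"O": 0.85, "B-PER": 0.15, "I-PER": 0.0},
    "B-PER": {"O": 0.6, "B-PER": 0.05, "I-PER": 0.35},
    "I-PER": {"O": 0.7, "B-PER": 0.05, "I-PER": 0.25},
}
# EMIT[j][true_label][output]: probability that labeling function j outputs
# `output` for a token whose true label is `true_label`.
EMIT = [
    {  # j = 0: a precise gazetteer that often misses names
        "O": {"O": 0.99, "B-PER": 0.005, "I-PER": 0.005},
        "B-PER": {"O": 0.5, "B-PER": 0.45, "I-PER": 0.05},
        "I-PER": {"O": 0.5, "B-PER": 0.05, "I-PER": 0.45},
    },
    {  # j = 1: a capitalization heuristic with high recall, lower precision
        "O": {"O": 0.9, "B-PER": 0.08, "I-PER": 0.02},
        "B-PER": {"O": 0.1, "B-PER": 0.85, "I-PER": 0.05},
        "I-PER": {"O": 0.1, "B-PER": 0.1, "I-PER": 0.8},
    },
]


def log(p: float) -> float:
    return math.log(p) if p > 0 else -math.inf


def log_emit(state: str, outputs: List[str]) -> float:
    # Labeling functions are treated as conditionally independent given the
    # latent state, so their log-probabilities add up. Only their outputs
    # enter the model, never the words themselves.
    return sum(log(EMIT[j][state][o]) for j, o in enumerate(outputs))


def viterbi(observations: List[List[str]]) -> List[str]:
    """observations[t][j] is the label labeling function j gave token t."""
    best: Dict[str, float] = {
        s: log(START[s]) + log_emit(s, observations[0]) for s in STATES
    }
    backpointers = []
    for outputs in observations[1:]:
        new_best, pointer = {}, {}
        for s in STATES:
            prev = max(STATES, key=lambda p: best[p] + log(TRANS[p][s]))
            new_best[s] = best[prev] + log(TRANS[prev][s]) + log_emit(s, outputs)
            pointer[s] = prev
        best = new_best
        backpointers.append(pointer)
    # Follow the back-pointers from the best final state.
    state = max(STATES, key=lambda s: best[s])
    path = [state]
    for pointer in reversed(backpointers):
        state = pointer[state]
        path.append(state)
    return path[::-1]


# (gazetteer, capitalization) outputs for "Dear Anna Schmidt in Berlin"
observations = [["O", "O"], ["B-PER", "B-PER"], ["I-PER", "I-PER"],
                ["O", "O"], ["O", "B-PER"]]
print(viterbi(observations))
# → ['O', 'B-PER', 'I-PER', 'O', 'O']
```

With these parameters, the decoder keeps "Anna Schmidt" as a name but overrides the capitalization heuristic on "Berlin": the gazetteer's "O" output, combined with the low probability of a name starting after an "O", outweighs the heuristic's "B-PER". The outcome is sensitive to the chosen numbers, which is why the real HMM estimates them from data.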

Experimental results

In their experiments, the HMM-aggregated labels consistently outperform very strong baselines, including the aforementioned AdaptaBERT and even an ensemble of 5 (!) AdaptaBERT models, while being much cheaper, especially during inference. The authors also show that the HMM-aggregated labels can be used to train a neural network that performs very similarly to the HMM but does not need the labeling functions at inference time. The corresponding [GitHub repository](https://github.com/NorskRegnesentral/weak-supervision-for-NER) contains many examples of architectures that work well.

For more information on the models and experimental setup, have a look at the paper (link at the top of this page).

What we think about it

In our opinion, weak supervision is a very attractive approach for industry, especially because of its flexibility and low cost. Skweak is a great library for NLP because, unlike alternatives such as Snorkel, it takes the dependencies between tokens in a sequence into account. We also see a link to the data-centric movement in AI, where working on the data is valued as much as, or more than, working on the model itself. Building labeling functions from expert knowledge to annotate a large amount of data automatically and consistently is also an acknowledgement of the importance of annotated data for model training.

In our own experiments, the HMM-aggregated labels did not perform that well out of the box, but the automatic annotations proved to be a great starting point for manual annotation. A few manually annotated examples can go a long way, even if you do not make use of the few-shot capabilities of the latest large language models. How many you need depends on the complexity of the task: if your only goal is to distinguish names from non-name words, it may be worth reconsidering your data needs compared with a task that distinguishes between a large variety of named entity classes (such as person, location, organization, product, money, quantity, …).

Even for simpler variants of the NER task, however, the recipe of pretraining on the automatic labels and then fine-tuning on a few manually annotated examples consistently led to performance gains for us.

We also found that the potential of distributional features, e.g., embeddings from a pretrained word2vec or fastText model, should never be underestimated, especially when little annotated data is available. Skweak does not use them in its aggregation model, but, in our experience, a model trained on the resulting noisy annotations should not miss out on them.